( The diagram above ties all the individual essays together; it's helpful to keep it in mind as you read through.

Complementing this diagram, and necessary reading before proceeding with any essay in the publication, including this one, is the Reader's Guide. It is aimed at helping readers understand some of the concepts developed throughout the entire publication and at avoiding repetition between the individual essays. Please look at it now, if you haven’t already; you can also review the guide at any later time through the top menu on the publication's home page.

If the Reader's Guide functions as a Prologue, the post Personal AI Assistant (PAIA) functions as an Epilogue; in that post, we begin to construct a formal approach to democratic governance based on the PAIA. We may even go out on a limb and attempt to pair that democratic governance with a more benign form of capitalistic structure of the economy, also via the PAIA.

There are two appendices to the publication, which the reader may consult as needed: a List of Changes made to the publication, in reverse chronological order, and a List of Resources, containing links to organizations, books, articles, and especially videos relevant to this publication.

The publication is “under construction”; it will go through many revisions until it reaches its final form, if it ever does. Your comments are the most valuable measure of what needs to change. )

In this article, we cover the Align arrow in the top diagram. Large Language Models (LLMs) are the most representative examples of our current AI, so these LLMs are what we mostly mean by AI in the title of the publication: “AI, Democracy, Human Values, … and YOU”.

When we use the term LLM we often refer to a stronger subclass of systems named Generative Large Language Multi-Modal (GLLMM) models, appropriately nicknamed Golems. These models are multi-modal in the sense that they are trained on more than just text data, so they are stronger than text-only LLMs. They can also understand and generate images, audio, and video. GPT-4 from OpenAI is the best-known example of a golem.

Our main goal in this essay is to cover the alignment of these golems with human values, arguably the main problem of Artificial Intelligence. We will make the point that LLMs, and golems in particular, while not repositories of knowledge itself, are rather awesome interfaces to knowledge, with a remarkable impact on how humans will access knowledge.

Most people have already used ChatGPT or a similar product and the awesomeness of these interfaces is familiar by now. We’ll rely on this familiarity to also make the point that the personal AI assistant (PAIA) introduced in the Free Will and Democracy and Man's Search for Relevance essays and summarized at the bottom of every essay in the publication will not only link to such LLMs, but go an important step further.

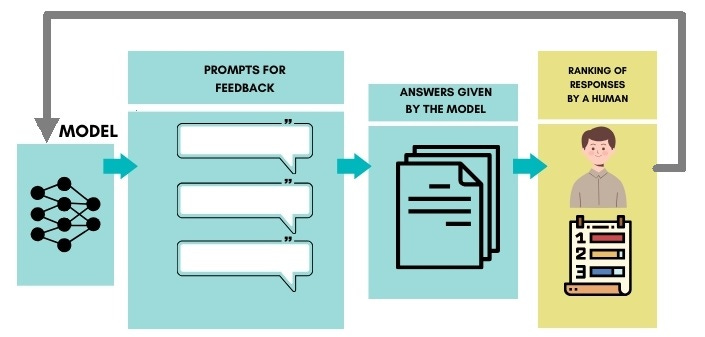

This step is a natural extension of the main method used by AI labs to align the LLMs with human values, a method named RLHF (Reinforcement Learning from Human Feedback). We will spend considerable time in this essay explaining it, but suffice it here to mention that it consists of human annotators designing prompts for the LLMs and rating the responses the LLMs give to those prompts; these ratings are then used to further refine the models.

Now we can explain how a PAIA will extend RLHF, the main technique of our entire publication. You should know that we are looking ahead a bit: this extension does not yet exist. A PAIA will know you well; one may argue that it may know you better than you know yourself. It is constantly learning YOUR values.

Therefore, it can apply RLHF and further refine YOUR interfaces to knowledge, by scoring responses to prompts designed specifically with you in mind. This is a very powerful idea, one which I will use repeatedly to substantiate the view that AI does not have to be this frightening dystopian version of the future, but will be (if we don’t blow ourselves up in the interim) your best ally in finding your place and your relevance in the world.

The argument that this search for relevance is the main human motivation in the age of AI is made in the essay Man's Search for Relevance.

Golems

Referring to GLLMMs as golems is not merely a whimsical linguistic twist but a powerful metaphor that captures the essence of these models' complexity. In mythology, golems are creatures built from inanimate material and brought to life to serve their creators' purposes. They are complex constructs that can perform tasks beyond ordinary capabilities. Similarly, GLLMMs are advanced statistical models that handle complex data structures and relationships not easily tackled by simpler models.

The creation of a golem involves detailed planning and execution, often with specific words or symbols of power. Similarly, the development and application of GLLMMs require deep understanding and precise control over the model's specifications. The process requires a high level of expertise, akin to the knowledge and control needed to bring a golem to life and command it.

Golem stories often caution about unintended consequences: if improperly controlled or understood, a golem could wreak havoc. This allegory aligns with the use of GLLMMs; if misapplied or misunderstood, these models can lead to very damaging outcomes.

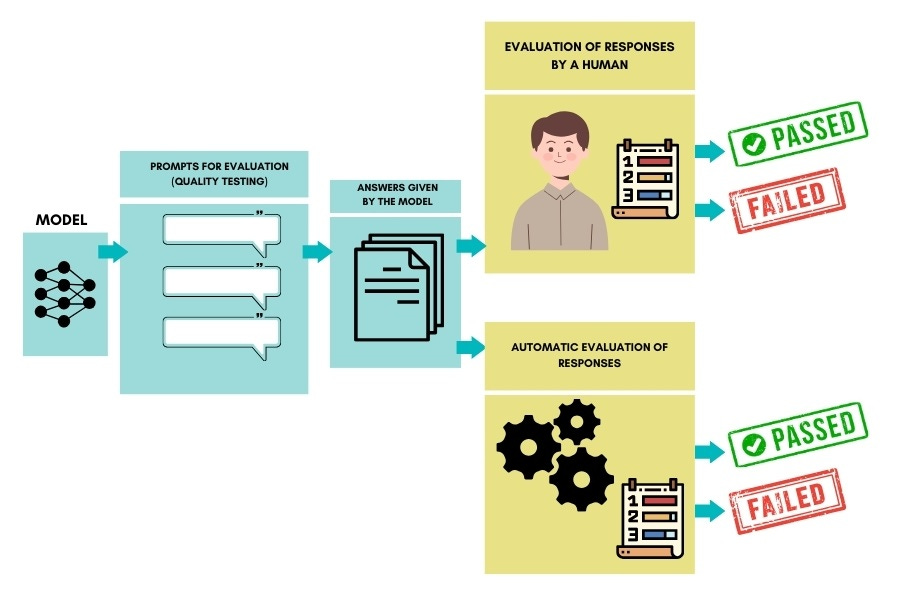

It is essential to understand what these models are and how they are built. But even more importantly for our publication, it is essential to understand why human feedback is necessary when building them, as the picture above tries to show. Specifically, we will focus on and describe below the method we already mentioned in the introductory paragraphs, namely RLHF (Reinforcement Learning from Human Feedback).

It is through this feedback that alignment with human values happens. So RLHF sits at the center of our viewpoint (as shown in the top diagram) that LLMs are more than just technical marvels: they are tools with profound implications for democracy and human values.

Note that the human feedback given during the creation of these models comes from people employed by high-tech companies. How the resulting models are scrutinized for satisfying (future) governmental regulations is the subject of another article in the series, Governmental Regulations of AI.

LLMs and Democratic Values

Enhancing Public Discourse

The LLMs have the potential to enrich democratic processes by enhancing public discourse. They can provide nuanced, multi-perspective information, aiding citizens in making more informed decisions. For instance, they can generate accessible summaries of complex policy documents or visualize data in ways that make it easier for the public to understand intricate issues.

Challenges to Democratic Norms

However, the power of these models (LLMs and GLLMMs) comes with significant challenges. The ability of these models to generate realistic content can be exploited to create deepfakes or propagate misinformation. This poses a threat to the integrity of democratic processes, such as elections, where public opinion can be swayed by false information. Moreover, the algorithms driving these models might embed hidden biases, inadvertently perpetuating stereotypes and unequal representation in public discourse.

LLMs and Human Values

Reinforcing Human Creativity and Expression

On a positive note, the LLMs can significantly enhance human creativity and expression. Artists, designers, and content creators can collaborate with these models to explore new realms of creativity, blending human intuition with AI-generated content. This synergy can lead to unprecedented forms of art, literature, and multimedia content, enriching human culture and expression.

Ethical and Societal Implications

The deployment of the LLMs raises important ethical questions. As these models become more integrated into our daily lives, issues around privacy, consent, and authorship emerge. For instance, the use of someone's likeness or ideas without consent in AI-generated content can lead to ethical dilemmas. Additionally, as AI becomes more adept at mimicking human interaction, it is important to maintain clear boundaries to preserve the authenticity of human relationships and experiences.

What Does it Mean to Align AI (read LLMs) with Values?

By values, we mean the reconciled set of values. In the article Democracy And Human Values, we defined reconciled values to be a logically consistent (i.e. non-contradictory) set of combined democratic principles and human values. Such a set is obtained through debate and compromise.

These reconciled values, in a broad sense, encompass the principles, standards, and qualities considered worthwhile or desirable by individuals living in a democracy. These values are not uniform across cultures or countries; they are deeply influenced by social, cultural, and historical factors, leading to a diverse range of priorities and ethical standpoints.

At its core, the reconciled set often includes fundamental values like respect for human life, fairness, justice, freedom, and autonomy. These principles are echoed in various international declarations and ethical frameworks, such as the Universal Declaration of Human Rights. Additionally, values like empathy, compassion, and cooperation are essential in creating social harmony and understanding. They are central to ethical theories like care ethics, which emphasizes the moral significance of caring for others and maintaining relational ties.

However, the challenge in obtaining a reconciled set of values lies in the pluralistic and sometimes conflicting nature of these values. For instance, the value of individual freedom may clash with collective welfare in certain contexts, requiring a nuanced approach to balance these competing interests. Moreover, the dynamic and evolving nature of human values, shaped by ongoing societal changes and ethical debates, adds another layer of complexity. This continuous evolution necessitates that AI systems are adaptable and responsive to changes in societal values and norms.

To address this alignment problem, interdisciplinary collaboration is crucial. Insights from philosophy, sociology, anthropology, and psychology can provide a deeper understanding of human values and their contextual nuances. Additionally, involving diverse stakeholders in the development and governance of AI ensures that a broad spectrum of values and perspectives is considered. This inclusive approach is vital in creating AI systems that are not only technically proficient but also ethically aligned with the diversity of human values.

For further reading, one might refer to "Moral Machines: Teaching Robots Right from Wrong" by Wendell Wallach and Colin Allen, which goes deeper into the challenges of embedding moral decision-making in AI. Additionally, "The Ethics of Artificial Intelligence" edited by Matthew Liao provides a comprehensive overview of ethical issues in AI, including the alignment problem.

How LLMs are Built

We will simplify now for the rest of the essay and focus on textual data only, thereby treating the entire class of LLMs, not just the stronger golems, because it makes alignment with human values easier to understand.

LLMs are trained on very large datasets, drawn from a large share of the text publicly available in digital form on the Internet. Their construction is a sophisticated, multi-stage process involving advanced neural network architectures and fine-tuning techniques.

Below is an overview of the stages involved in building these models, with minimal but unavoidable technical wording. You may choose to skim through and return later if the technical jargon is bothersome. But completely ignoring these steps is not recommended, because these AI systems are becoming part of our everyday lives. Here are the stages:

Data Collection and Preprocessing: Initially, large and diverse datasets are collected. Data preprocessing is crucial to ensure quality and diversity. It involves cleaning, normalization, and sometimes anonymization of data to comply with privacy standards.
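To make the cleaning, normalization, and anonymization mentioned in this stage a little more concrete, here is a minimal sketch in Python. It is only an illustration under simplifying assumptions: the function name `preprocess` and the `EMAIL` pattern are ours, and real pipelines apply far more elaborate filters than these few lines.

```python
import re
import unicodedata

# Deliberately simple pattern for e-mail addresses (an assumption for this sketch).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def preprocess(documents):
    """Clean, normalize, anonymize, and de-duplicate raw text documents."""
    seen = set()
    cleaned = []
    for doc in documents:
        text = unicodedata.normalize("NFKC", doc)   # normalization: unify Unicode forms
        text = EMAIL.sub("[EMAIL]", text)           # anonymization: mask e-mail addresses
        text = re.sub(r"\s+", " ", text).strip()    # cleaning: collapse whitespace
        key = text.lower()
        if text and key not in seen:                # drop empties and case-insensitive duplicates
            seen.add(key)
            cleaned.append(text)
    return cleaned

raw = ["Hello   world!", "hello world!", "Contact me at jane.doe@example.com", "   "]
corpus = preprocess(raw)
```

In this toy run, the four raw strings reduce to two documents: the duplicate greeting and the empty string are dropped, and the e-mail address is replaced by a placeholder.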

Model Architecture Design: LLMs are built upon transformer architectures, known for their ability to handle sequential data and capture long-range dependencies. These architectures consist of layers of self-attention mechanisms that process input data in parallel, providing efficiency and scalability. In the multimodal golems, the architecture is additionally designed to handle multimodal inputs, meaning it can process and integrate different types of data simultaneously.
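For readers who like to see the mechanics, the self-attention mechanism named in this stage can be sketched in a few lines of plain Python. This is a simplified illustration, not a full transformer layer: we assume each token is already a small vector and we use those vectors directly as queries, keys, and values, whereas a real model first passes them through learned projections.

```python
import math

def softmax(xs):
    """Turn a list of scores into positive weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted mix of all values."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this token "looks at" every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Three toy "tokens", each represented by a 2-dimensional vector.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Every token's output depends on all tokens at once, and no token has to wait for the previous one. This is the sense in which the input is processed in parallel and long-range dependencies can be captured.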

Pre-Training: The model undergoes an extensive pre-training phase on large datasets. This phase involves unsupervised learning (more precisely, self-supervised learning: the training signal comes from the data itself, without humans in the loop), where the model learns to predict parts of the input data (like the next word in a sentence or the next pixel in an image). This stage is computationally intensive and forms the backbone of the model’s ability to generate coherent and contextually relevant outputs.

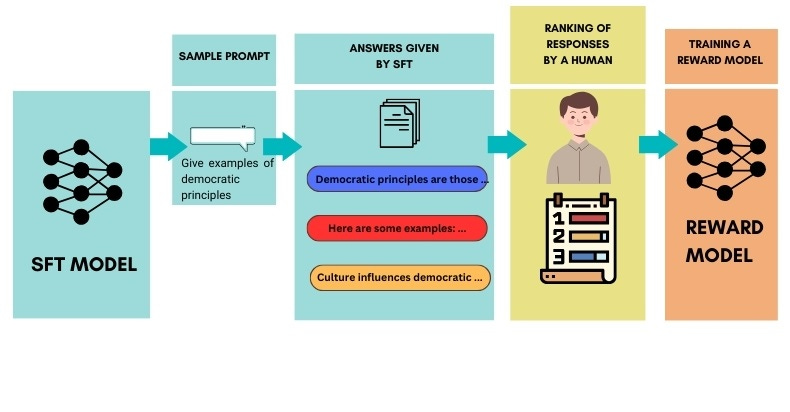
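The idea of learning to predict the next word can be illustrated with a toy model that simply counts which word follows which. This is a deliberately crude stand-in, and it is our assumption for illustration only: real LLMs use large neural networks over tokens rather than word counts. The quantity being driven down during pre-training is nevertheless of the same kind as `average_loss` below, namely how surprised the model is by the word that actually comes next.

```python
import math
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Predicted probability of each possible next word after `prev`."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()}

def average_loss(counts, text):
    """Average negative log-probability given to each actual next word.

    Only meaningful for word pairs that occurred in the training text.
    """
    words = text.split()
    losses = [-math.log(next_word_probs(counts, p)[n])
              for p, n in zip(words, words[1:])]
    return sum(losses) / len(losses)

corpus = "the cat sat on the mat the cat ran"
model = train_bigram(corpus)
probs = next_word_probs(model, "the")
loss = average_loss(model, corpus)
```

After this tiny training run, the model gives "cat" a probability of 2/3 and "mat" a probability of 1/3 as the word following "the". No human labeled anything: the text itself supplied the answers.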

Fine-Tuning with Supervised Learning: After pre-training, the model is fine-tuned on more specific tasks or datasets. This stage often involves supervised learning, where the model is trained on labeled data to perform specific tasks like language translation, image generation, or question answering. Labeling is done by humans, the label being a response to a given prompt, i.e. what a human thinks the response should be. By trying to get close to these (prompt, response) pairs, the pre-trained model gets fine-tuned. In the picture below, SFT stands for Supervised Fine-Tuned.

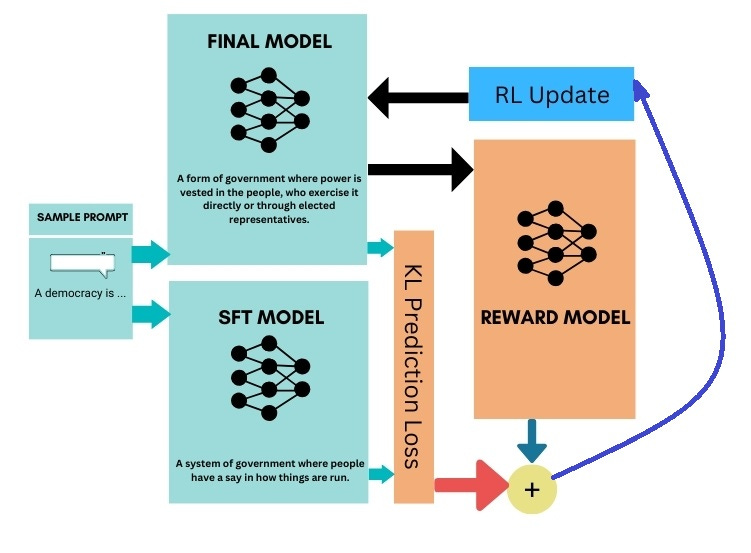
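What "getting close to the (prompt, response) pairs" means can be sketched as a loss that is small when the model assigns high probability to the human-written response. The sketch below rests on two assumptions of ours: the probabilities are made-up numbers rather than outputs of a real model, and the loss is computed over the response words only, which is a common practice but not something this essay prescribes.

```python
import math

def sft_loss(token_probs, is_response):
    """Average negative log-probability over the response tokens only.

    token_probs[i] is the probability the model gave to the i-th token of the
    concatenated (prompt + response) text; is_response[i] marks response tokens.
    """
    losses = [-math.log(p) for p, keep in zip(token_probs, is_response) if keep]
    return sum(losses) / len(losses)

# Hypothetical example: 3 prompt tokens followed by 2 human-written response tokens.
token_probs = [0.9, 0.8, 0.7, 0.5, 0.25]
is_response = [False, False, False, True, True]
loss = sft_loss(token_probs, is_response)
```

Here the loss is about 1.04. Fine-tuning adjusts the model so that the probabilities of the human-written response tokens go up, which pushes this number toward zero.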

Reinforcement Learning from Human Feedback (RLHF): In this stage, the SFT model is further refined using human feedback. RLHF involves collecting data where humans rate the outputs of the model. This feedback is used to train a reward model.
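How human ratings turn into a reward model can be sketched as follows. We assume here that the ratings have been converted into pairwise comparisons (this response was preferred over that one) and that each response is described by two hand-made numeric features. Both are simplifications of ours: real reward models are neural networks that read the full text. The pairwise loss used is the one commonly associated with the Bradley-Terry preference model.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def reward(w, features):
    """Linear reward: a weighted sum of the response's features."""
    return sum(wi * fi for wi, fi in zip(w, features))

def train_reward_model(comparisons, n_features, lr=0.5, epochs=200):
    """Fit weights so that human-preferred responses receive higher reward.

    Each comparison is (features_of_preferred, features_of_rejected).
    """
    w = [0.0] * n_features
    for _ in range(epochs):
        for good, bad in comparisons:
            diff = [g - b for g, b in zip(good, bad)]
            margin = reward(w, diff)  # reward(good) - reward(bad)
            # Gradient descent on -log(sigmoid(margin)).
            step = lr * (1.0 - sigmoid(margin))
            w = [wi + step * di for wi, di in zip(w, diff)]
    return w

# Hypothetical features per response: [helpfulness cue, rudeness cue].
comparisons = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([1.0, 0.0], [1.0, 1.0]),
    ([0.0, 0.0], [0.0, 1.0]),
]
w = train_reward_model(comparisons, n_features=2)
```

After training, the weight on the helpfulness cue is positive and the weight on the rudeness cue is negative, so the reward model has absorbed the preferences expressed by the annotators.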

The reward model then guides another step of fine-tuning, this time using reinforcement learning techniques, which yields the final model. RLHF helps align the model's outputs with human values and expectations, so that generated content is more likely to be not only accurate but also ethically and contextually appropriate. Notice how the outputs of the SFT model and the final model are compared, mixed in with a reward, run through a Reinforcement Learning update, and then used to refine the final model in a feedback loop.
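The comparison between the SFT model and the final model, mixed in with the reward, can be sketched as a single number per generated response. In the sketch below, the reward-model score is reduced by a penalty that grows as the model being trained drifts away from the SFT model. The function name and the value of `beta` are assumptions chosen for illustration, and the reinforcement learning update that consumes this number is not shown.

```python
import math

def shaped_reward(reward_score, logp_policy, logp_sft, beta=0.1):
    """Reward-model score minus a penalty for drifting away from the SFT model.

    logp_policy and logp_sft are the log-probabilities that the model being
    trained and the frozen SFT model assign to the same generated response.
    """
    drift = logp_policy - logp_sft
    return reward_score - beta * drift

# Same response, same reward score; only the SFT model's opinion differs.
same = shaped_reward(2.0, math.log(0.5), math.log(0.5))
drifted = shaped_reward(2.0, math.log(0.5), math.log(0.05))
```

When both models agree, the full reward of 2.0 is kept. When the trained model finds the response ten times more likely than the SFT model did, the reward drops to about 1.77. The penalty discourages the model from chasing reward by producing text that strays far from what it learned during supervised fine-tuning.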

We summarize the above building of a model by human feedback in the following picture, which we will need later.

Evaluation and Iteration: The model's performance is rigorously evaluated using a variety of benchmarks and tests, including both automated metrics and human evaluations. This evaluation process helps identify areas for improvement. Based on these insights, further iterations of training and fine-tuning are conducted to refine the model's capabilities.

Deployment and Monitoring: Once the model achieves the desired performance level, it is deployed for real-world applications. Continuous monitoring is essential to ensure the model operates as intended, particularly in response to novel inputs or changing real-world conditions.

So, one can see from the steps above that building LLMs is a complex and resource-intensive process, requiring expertise in machine learning, data processing, and ethical AI practices. The integration of RLHF is particularly significant as it represents a shift towards more human-centered AI development, ensuring that these powerful models are aligned with human preferences and democratic norms.

A Deeper, More Technical Description

For the steps outlined above to make more sense, the reader would need some basic knowledge of AI concepts, especially about neural networks and the backpropagation algorithm.

My website ARTIFICIAL INTELLIGENCE, Dreams and Fears of a Blue Dot has an article covering this background material: AI Blue Dot | Main Concepts. However, this knowledge is not absolutely necessary for understanding the rest of the essays in this publication. While the previous section (How LLMs Are Built) should not be skipped, you may choose to skip this more technical section.

For a technical description of LLMs here on Substack I recommend:

And thirdly, the reader may consult Mark Riedl’s article on Medium: A Very Gentle Introduction to Large Language Models without the Hype.

For those readers who do want a more technical description, the best one that I know of is given in the video clip below. It has a few short periods of technical details that might bother the reader but it always and quickly returns to an informal tone, easy to understand.

The Relationship with Knowledge

Language Models are (Golemic) Interfaces to Knowledge

These powerful models, which can understand and generate both text and multimedia content, are often misconceived as repositories of knowledge. However, it is crucial to recognize that LLMs are, in essence, interfaces to knowledge, not knowledge itself. They are shaped by human values, reflecting human interpretations of reality rather than reality itself. Just like the powerful mythological golems.

Understanding LLMs as Interfaces to Knowledge

Richard Feynman helps us understand this distinction between language (the names of things) and the things themselves (the knowledge).

To stress this point even further, LLMs are often seen not just as repositories of knowledge but as omniscient (they know everything!). The term Golem seems to fit this more expansive view. This view has fed a fear of AI’s potential that is, in my view, unnecessary. It is vital to understand that LLMs are just sophisticated tools built to interact with the vast array of human knowledge. They do not 'know' in the human sense; they process and replicate patterns found in their training data. This distinction is crucial in appreciating their role and limitations.

Perhaps this interfacing role is best exemplified by the Wolfram plugin to the computational world. The plugin is a bridge between the statistical inference of the LLMs (which is close to how humans respond but can give wrong answers) and the rule-based computational world of hard knowledge (where wrong answers do not exist, relative to the knowledge system).
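The bridge between the two worlds can be sketched as a simple router. The code below is not the Wolfram plugin and does not use its interface; it is a hypothetical stand-in in which plain arithmetic is handed to a small rule-based evaluator, while everything else goes to a language model, represented here by a placeholder function.

```python
import ast
import operator

# The only operations the rule-based side understands in this sketch.
OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
       ast.Mult: operator.mul, ast.Div: operator.truediv}

def compute(expression):
    """Evaluate plain arithmetic by fixed rules; same input, same answer."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in OPS:
            return OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("not a plain arithmetic expression")
    return walk(ast.parse(expression, mode="eval").body)

def answer(question, language_model):
    """Send arithmetic to the rule-based engine, everything else to the LLM."""
    try:
        return str(compute(question))
    except (ValueError, SyntaxError):
        return language_model(question)

def fake_llm(question):
    """Hypothetical placeholder for a call to a real language model."""
    return "(a fluent, statistically likely answer)"

exact = answer("12345 * 6789", fake_llm)
fluent = answer("Why is the sky blue?", fake_llm)
```

The multiplication comes back as exactly 83810205 because it is computed by rules, not guessed from patterns, while the open-ended question is left to the language model.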

Human feedback (RLHF) is essential when building LLMs

We mentioned this in the introduction, but it is worth repeating here that the LLMs are more than just a technological breakthrough. In our publication “AI, Democracy, and Human Values” we treat them as instruments (=golems) that can shape our democratic fabric and reflect our human values. That is why the RLHF step in the building of such a model (step 5 above) is of utmost importance for us. It is this step that allows the LLMs to function as such instruments, just like the mythological golems created out of clay.

( Just like all posts have the same diagram at the top, they also have the same set of axioms at the bottom. The diagram at the top is about where we are now; this set of axioms is about the future.

Proposing a formal theory of democratic governance may look dystopian and may seem to infringe on a citizen’s freedom of choice. But it is trying to do exactly the opposite: enhancing citizens' independence and avoiding the anarchy that AI intrusion on governance will bring if formal rules for its behavior are not established.

One cannot worry about an existential threat to humanity and not think of developing AI with formal specifications and proving formally (=mathematically) that AI systems do indeed satisfy their specifications.

These formal rules should uphold a subset of democratic principles of liberty, equality, and justice, and reconcile them with the subset of core human values of freedom, dignity, and respect. The existence of such a reconciled subset is postulated in Axiom 2.

Now, the caveat. We are nowhere near such a formal theory, because among other things, we do not yet have a mathematical theory explaining how neural networks learn. Without it, one cannot establish a deductive mechanism needed for proofs. So it will be a long road, but eventually we will have to travel it. )

Towards a Formal Theory of Democratic Governance in the Age of AI

Axiom 1: Humans Have Free Will

Axiom 2: A consistent (=non-contradictory) set of democratic principles and human values exists

Axiom 3: Citizens are endowed with a personal AI assistant (PAIA), whose security, convenience, and privacy are under the citizen’s control

Axiom 4: All PAIAs are aligned with the set described in Axiom 2

Axiom 5: A PAIA always asks for confirmation before taking ANY action

Axiom 6: Citizens may decide to let their PAIAs vote for them, after confirmation

Axiom 7: PAIAs monitor and score the match between the citizens’ political inclinations and the way their representatives in Congress vote and campaign

Axiom 8: A PAIA upholds the citizen’s decisions and political stands, and never overrides them

Axiom 9: Humans are primarily driven by a search for relevance

Axiom 10: The 3 components of relevance can be measured by the PAIA. This measurement is private

Axiom 11: Humans configure their PAIAs to advise them on ways to increase the components of their relevance in whatever ways they find desirable

Axiom 12: A PAIA should assume that the citizen lives in a kind, fair, and effective democracy and propose ways to keep it as such

More technical justification for the need for formal AI verification can be found on the SD-AI (Stronger Democracy through Artificial Intelligence) website; articles related to this formalism are stored in SD-AI’s library section.
